On the Future of AI-Assisted Coding

What will the future of AI-assisted coding look like? Will we be replaced?

I’ll limit myself to sharing a few perspectives that I found interesting. (I might keep updating this as I find more.)

Simon Willison offers an optimistic perspective:

The more time I spend using LLMs for code, the less I worry for my career - even as their coding capabilities continue to improve. My job is to identify problems that can be solved with code, then solve them, then verify that the solution works and has actually addressed the problem. A more advanced LLM may eventually be able to completely handle the middle piece. It can help with the first and last pieces, but only when operated by someone who understands both the problems to be solved and how to interact with the LLM to help solve them. No matter how good these things get, they will still need someone to find problems for them to solve, define those problems and confirm that they are solved. That’s a job - one that other humans will be happy to outsource to an expert practitioner. It’s also about 80% of what I do as a software developer already.

Antirez: Human creativity still has an edge

In his blog post, Antirez discusses how human coders can still outperform LLMs on specific technical challenges:

The creativity of humans still has an edge. We are capable of really thinking outside the box, envisioning strange and imprecise solutions that can work better than others. This is something that is extremely hard for LLMs. Still, to verify all my ideas, Gemini was very useful, and maybe I started to think about the problem in such terms because I had a “smart duck” to talk with.

Andrej Karpathy: Learning from autonomous vehicle timelines

Andrej Karpathy draws parallels between AI coding assistants and autonomous vehicles, warning against overly optimistic timeline predictions:

We got into this car and we went for about a 30-minute drive around Palo Alto highways, streets and so on, and this drive was perfect. There were zero interventions, and this was 2013, which is now 12 years ago. It kind of struck me because at the time when I had this perfect drive, this perfect demo, I felt like “wow, self-driving is imminent” because this just worked—this is incredible. But here we are 12 years later and we are still working on autonomy. We are still working on driving agents, and even now we haven’t actually really solved the problem. You may see Waymos going around and they look driverless, but there’s still a lot of teleoperation and a lot of human in the loop.

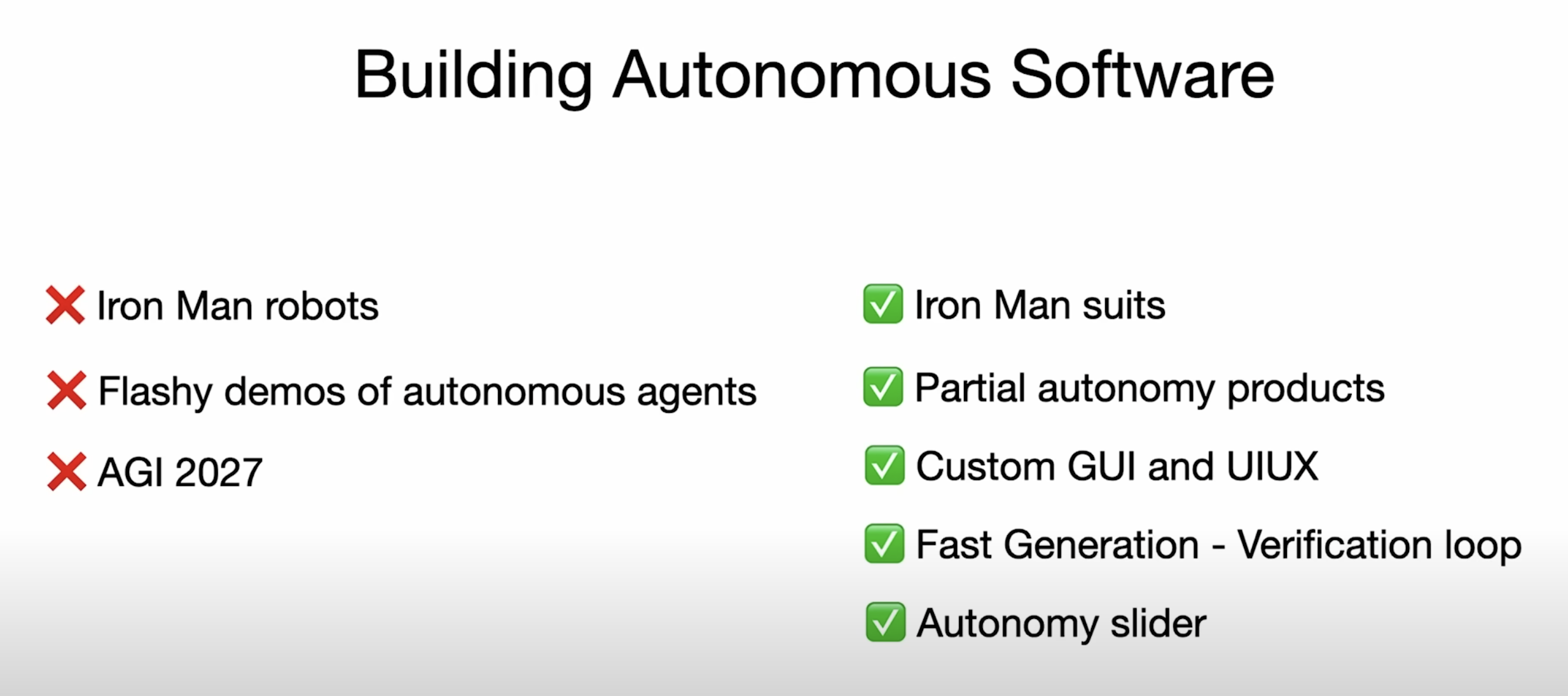

Karpathy suggests that working with AI agents should be more like wearing an Iron Man suit, enhancing human capabilities rather than replacing them entirely: